Yttrium2

Perpetual Question Machine

Posts: 1104

Registered: 7-2-2015

Using ChatGPT to answer chemistry questions

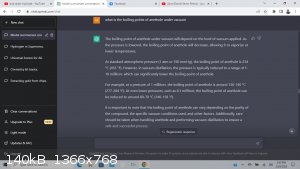

I normally get a single boiling point when doing a search, but I put in a few keywords, and this was able to tell me a few different temperatures at which I could pull off anethole under varying vacuum levels.

ChatGPT is pretty intuitive. You can say "elaborate on the second paragraph" and it will.

But I found it useful for getting a few points to shoot for under vacuum in a snap, much quicker than I could have here. Granted, it's not always right, but it can help tremendously.

[Edited on 2/24/2023 by Yttrium2]

Edit by Texium: changed title for clarity

[Edited on 5-2-2023 by Texium]

Texium

Administrator

Posts: 4580

Registered: 11-1-2014

Location: Salt Lake City

Mood: PhD candidate!

I would never trust information delivered by an AI without a citation. If it cited its sources I’d be more impressed.

Twospoons

International Hazard

Posts: 1324

Registered: 26-7-2004

Location: Middle Earth

Mood: A trace of hope...

I wouldn't trust it either. I asked it to create some Excel code, which it did. On close inspection there were some oddities, so I challenged it; it apologized and gave 'corrected' code, which was also odd. I challenged it again, and it apologized again and changed the code again. I gave up at that point.

Helicopter: "helico" -> spiral, "pter" -> with wings

Loptr

International Hazard

Posts: 1348

Registered: 20-5-2014

Location: USA

Mood: Grateful

Don't trust ChatGPT. It generates words based on sub-word relationships, nothing more. It writes text that reads well, but anything that has a structure below language rules, good luck.

We are working with it and others at work, and have found that LLMs have a limited future in terms of what they can do by themselves, without being combined with other techniques. One of my projects uses LLMs to synthesize large amounts of background discussion for training purposes. It's pretty cool what it's capable of, but don't be fooled.

"Question everything generally thought to be obvious." - Dieter Rams

j_sum1

Administrator

Posts: 6320

Registered: 4-10-2014

Location: At home

Mood: Most of the ducks are in a row

For those interested, Tom Scott on YouTube made quite a nice video about this topic: what the implications are and how well it managed to write code for a project he had. It's worth the 10 minutes or so to watch.

BingTinsley

Harmless

Posts: 2

Registered: 24-11-2022

Mood: Always learning

ChatGPT "forgot" that making diazonium salts most often (always?) requires preparing nitrous acid in situ. And to generate your HNO2 in a solution containing a nitrite, you'll need to add some strong acid (usually HCl). The way it ends with "thank you for bringing this to my attention" gives me pause.

Oh, and it "advised" me that one can produce potassium hydroxide from potassium chloride and calcium hydroxide by the following equation:

2KCl + Ca(OH)2 → CaCl2 + 2KOH

Don't go blindly running reactions from ChatGPT. It can be a good tool to point you in the right direction, or to do the heavy lifting for your math needs, but it doesn't give citations and it can be wrong in many cases.

[Edited on 7-3-2023 by BingTinsley]

Neal

Hazard to Others

Posts: 143

Registered: 24-12-2021

Location: Chicago, IL, USA.

Is this the first ChatGPT thread on this forum? I just started using it a few days ago.

I was gonna start my own ChatGPT thread, but maybe we can share. What do you guys think of this:

How does crystal field theory explain why KMnO4 is purple, and how does ligand theory explain why KMnO4 is purple?

-

Crystal field theory and ligand theory are two theoretical models that are used to describe the electronic properties of transition metal complexes. These models can be used to explain why KMnO4 is purple.

According to crystal field theory, the color of a transition metal complex is determined by the energy difference between the d orbitals of the metal ion. When light is absorbed by a complex, an electron in a lower energy d orbital is excited to a higher energy d orbital. The color of the complex is determined by the wavelength of light that is absorbed, which corresponds to the energy difference between the d orbitals. In KMnO4, the Mn(VII) ion is in an octahedral environment, and the purple color is due to the energy difference between the d orbitals of the Mn ion.

On the other hand, according to ligand field theory, the color of a transition metal complex is also determined by the interaction between the metal ion and the ligands. The ligands can affect the energy levels of the d orbitals of the metal ion, and the color of the complex is determined by the energy difference between the highest occupied molecular orbital (HOMO) of the ligands and the lowest unoccupied molecular orbital (LUMO) of the metal ion. In KMnO4, the ligands are oxygen atoms from the permanganate ion, and the purple color is due to the interaction between the Mn ion and the oxygen ligands.

Overall, both crystal field theory and ligand field theory can be used to explain why KMnO4 is purple. Crystal field theory explains the color in terms of the energy difference between the d orbitals of the Mn ion, while ligand field theory explains the color in terms of the interaction between the Mn ion and the oxygen ligands in the permanganate ion.

Fulmen

International Hazard

Posts: 1716

Registered: 24-9-2005

Mood: Bored

Exactly. It does not distinguish between language and facts, so it's a lot like a politician.

We're not banging rocks together here. We know how to put a man back together.

Texium

Administrator

Posts: 4580

Registered: 11-1-2014

Location: Salt Lake City

Mood: PhD candidate!

Well it’s not wrong, but it also doesn’t tell you anything specific about permanganate. The information it gives is very generalizable, see:

Quote: | How does crystal field theory explain why KMnO4 is purple, and how does ligand theory explain why KMnO4 is purple?

-

Crystal field theory and ligand theory are two theoretical models that are used to describe the electronic properties of transition metal complexes. These models can be used to explain why [complex] is [color].

According to crystal field theory, the color of a transition metal complex is determined by the energy difference between the d orbitals of the metal ion. When light is absorbed by a complex, an electron in a lower energy d orbital is excited to a higher energy d orbital. The color of the complex is determined by the wavelength of light that is absorbed, which corresponds to the energy difference between the d orbitals. In [complex], the [metal] ion is in an octahedral environment, and the [color] color is due to the energy difference between the d orbitals of the [metal] ion.

On the other hand, according to ligand field theory, the color of a transition metal complex is also determined by the interaction between the metal ion and the ligands. The ligands can affect the energy levels of the d orbitals of the metal ion, and the color of the complex is determined by the energy difference between the highest occupied molecular orbital (HOMO) of the ligands and the lowest unoccupied molecular orbital (LUMO) of the metal ion. In [complex], the ligands are [ligand], and the [color] color is due to the interaction between the [metal] ion and the [ligands].

Overall, both crystal field theory and ligand field theory can be used to explain why [complex] is [color]. Crystal field theory explains the color in terms of the energy difference between the d orbitals of the [metal] ion, while ligand field theory explains the color in terms of the interaction between the [metal] ion and the [ligands] in the [complex] ion. |

It’s practically tautological. According to crystal field theory and ligand field theory, KMnO4 is purple because these theories determine that KMnO4 should be purple. The only information it gives you is the simplest textbook overview of CFT and LFT, which you would have gotten in greater detail if you had gone and read a textbook, as I suggested in your other thread, instead of asking an AI for spoonfeeding! Moreover, the answer ignores some specifics of permanganate that differ from more typical complex ions.

ShotBored

Hazard to Others

Posts: 124

Registered: 19-5-2017

Location: Germany

Mood: No Mood

Quote: Originally posted by Texium | It’s practically tautological. |

Who on this board has machine learning/AI experience in some form? Maybe the ChatGPT framework could be refined by a few people with the programming knowledge to assist with SciFinder searches, or with searching other scientific repositories and/or forums for helpful information? It would be a hell of an undertaking for the hobbyist chemist(s), though.

Jenks

Hazard to Others

Posts: 163

Registered: 1-12-2019

Quote: Originally posted by Loptr | Don't trust ChatGPT. It generates words based on sub-word relationships, nothing more. It writes text that reads well, but anything that has a structure below language rules, good luck. |

It must be more than that. I asked it a few questions I knew the answer to and it gave reasonable answers, containing (as did the one starting this thread) information that wasn't inherent in the question. Maybe it is just pulling up Wikipedia pages, but the information is coming from somewhere.

Neal

Hazard to Others

Posts: 143

Registered: 24-12-2021

Location: Chicago, IL, USA.

I read in the manual that it does not connect to the Internet? That must be false. It was able to provide statistics on which police departments in the U.S. kill the most people, and surely it could only do that by connecting to the Internet?

Loptr

International Hazard

Posts: 1348

Registered: 20-5-2014

Location: USA

Mood: Grateful

Quote: Originally posted by Jenks | Quote: Originally posted by Loptr | Don't trust ChatGPT. It generates words based on sub-word relationships, nothing more. It writes text that reads well, but anything that has a structure below language rules, good luck. |

It must be more than that. I asked it a few questions I knew the answer to and it gave reasonable answers, containing (as did the one starting this thread) information that wasn't inherent in the question. Maybe it is just pulling up Wikipedia pages, but the information is coming from somewhere. |

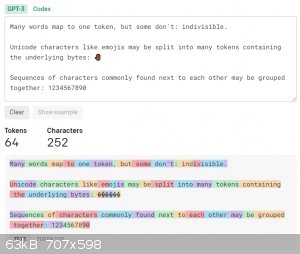

The actual words come from what is called an embedding, which, in simplified terms, is essentially its vocabulary. When the model is trained, the training code splits the incoming words into tokens using Byte-Pair Encoding, which establishes sub-word boundaries. You can check the attached image, or this site: https://platform.openai.com/tokenizer. These sub-word units, or tokens as they are called, allow the model to generate words that are much more fluent in the given context, compared to word-level embeddings, which end up with weird combinations and tenses of words in the output. They become part of an inconceivably massive conditional probability tensor that represents the probability of the second token given the first, the third given the first two, and on and on, until it gets to the end of the tokens you have provided as input. Once it reaches the end of your input, it is in text completion mode, which determines the token most likely to come next based on all the combined probabilities before it. All it has to do is pick from the top of the list or, in cases where the next token isn't definitive, pick one at random, weighted by probability. It builds a complete sentence by asking for the next token based on all the previous ones, over and over again, until it reaches an output limit or produces a stop code. That stop can be the result of multiple things, such as no known next token (unlikely for large LLMs), or the neural network having been trained with a strong sense of the beginnings and endings of things (simplified).
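The Byte-Pair Encoding step can be sketched with a toy, character-level version of the merge loop: count adjacent symbol pairs, fuse the most frequent pair into one symbol, and repeat. This is a minimal illustration under stated assumptions (a tiny made-up corpus, whitespace word splitting, ties broken by first occurrence); OpenAI's real tokenizers work on bytes with a far larger learned merge table, and the function names here are hypothetical.

```python
from collections import Counter


def apply_merge(symbols, pair):
    """Return a new symbol list with every adjacent occurrence of `pair` fused."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return out


def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merge rules from a whitespace-split corpus."""
    # Each word starts as a tuple of single characters, weighted by frequency.
    words = Counter(tuple(w) for w in corpus.split())
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in words.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        # Greedily pick the most frequent adjacent pair (ties: first seen).
        best = max(pairs, key=pairs.get)
        merges.append(best)
        new_words = Counter()
        for symbols, freq in words.items():
            new_words[tuple(apply_merge(list(symbols), best))] += freq
        words = new_words
    return merges


def encode(word, merges):
    """Tokenize a word by replaying the learned merges in order."""
    symbols = list(word)
    for pair in merges:
        symbols = apply_merge(symbols, pair)
    return symbols


merges = learn_bpe("low low low lower lowest newest newest", num_merges=4)
print(merges)
print(encode("lowest", merges))
print(encode("newer", merges))
```

With four merges, "lowest" comes out as `low` + `est`, while "newer", which matches none of the learned merges, falls back to single characters. That fallback is what lets a sub-word vocabulary cover words it never saw whole.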

You might be saying to yourself that this doesn't explain how a meaningful sentence is produced. The answer lies with the law of large numbers, and the fact that human language isn't as unique, mysterious, or unpredictable as you think. So much of the communication you witness in your daily life flies under your radar and makes absolutely no sense, but you just filter it out or accept it. What's amazing about training on that much data is that emergent relationships between words, groups of words, and context start to present themselves, which is why ChatGPT seems intelligent, but it is only conditional probability on a massive scale.
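The "conditional probability on a massive scale" idea can be made concrete with the chain rule, P(x1, ..., xn) = P(x1) * P(x2 | x1) * ... * P(xn | x1, ..., xn-1), plus a loop that keeps asking for the next token. The sketch below is a drastic simplification, assumed purely for illustration: a bigram count table that conditions only on the previous word, instead of a neural network conditioning on the whole context. It does show both selection modes described above (take the top of the list, or pick at random weighted by probability) and both ways a run ends (a stop token or an output limit). The `<stop>` marker and all function names are hypothetical.

```python
import random
from collections import Counter, defaultdict

STOP = "<stop>"  # hypothetical end-of-text token


def train_bigram(sentences):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split() + [STOP]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_token_probs(counts, prev):
    """P(next | prev), estimated from the counts."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {tok: c / total for tok, c in followers.items()}


def generate(counts, prompt, max_tokens=10, greedy=True, rng=None):
    rng = rng or random.Random()
    tokens = prompt.split()
    for _ in range(max_tokens):                  # output limit
        probs = next_token_probs(counts, tokens[-1])
        if not probs:                            # no known next token
            break
        if greedy:
            tok = max(probs, key=probs.get)      # top of the list
        else:                                    # random pick, weighted by probability
            tok = rng.choices(list(probs), weights=list(probs.values()))[0]
        if tok == STOP:                          # stop code
            break
        tokens.append(tok)
    return " ".join(tokens)


counts = train_bigram([
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the log",
])
print(generate(counts, "the", max_tokens=6))   # -> the cat sat on the cat sat
print(generate(counts, "the", greedy=False, rng=random.Random(0)))
```

Always taking the top token loops ("the cat sat on the cat sat"), which is one reason sampling is commonly used instead of pure greedy selection.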

Once the tokens have made their way to the output side of the neural network, they are decoded using the embeddings to get the final forms of the words that are returned to you. There are no actual words within the LLM models, nor do they look up data from the internet. All of its knowledge is contained within the weights of the matrices representing the hidden layers of the neural network. It is amazing... not going to lie, but not magic. Once you understand how it works, it loses its awe.
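The decoding step (output vector back to a word) can be sketched as follows, assuming the output layer reuses the input embedding matrix ("weight tying", a common choice in GPT-style models, though not necessarily what ChatGPT does). The network itself only produces a vector: that vector is scored against every row of the embedding table, a softmax turns the scores into probabilities, and only then is the winning token looked up as a string. The four-word vocabulary and 3-dimensional vectors are made up.

```python
import math

# Hypothetical 4-token vocabulary; each row is that token's embedding vector.
VOCAB = ["cobalt", "weight", "is", "purple"]
EMBED = [
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.2],
    [0.2, 0.0, 1.0],
    [0.5, 0.5, 0.5],
]


def softmax(xs):
    peak = max(xs)                       # subtract max for numerical stability
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def decode(hidden):
    """Map the network's final hidden vector to a token string.

    Assumes weight tying: each token's score (logit) is the dot product of
    the hidden vector with that token's embedding row.
    """
    logits = [sum(h * e for h, e in zip(hidden, row)) for row in EMBED]
    probs = softmax(logits)
    best = max(range(len(VOCAB)), key=probs.__getitem__)
    return VOCAB[best], probs


token, probs = decode([0.1, 0.0, 0.9])
print(token, [round(p, 3) for p in probs])
```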

You could seriously equate an LLM with a very large Decision Tree.

Caveat: Yes, you can train an LLM within a much larger system to interpret requests and convert them into commands, which are then fed to another piece of software that can fetch the data. That data can then either be returned to the user or fed back into an LLM to generate a summary for the user. I believe Bing Chat AI is taking this approach, since it seems to have the ability to access internet data.
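The pipeline in that caveat (model emits a command, other software fetches the data, the result is either returned or goes back through the model) might look roughly like this. Everything here is a hypothetical stand-in: `fake_llm` and `fake_search` are placeholders rather than any real model or search API, and the `SEARCH[...]` command format is invented for illustration; it is not how Bing Chat is documented to work.

```python
import re


def fake_llm(prompt):
    """Hypothetical stand-in for a language model trained to emit commands."""
    if prompt.startswith("USER:"):
        question = prompt[len("USER:"):].strip()
        return f"SEARCH[{question}]"          # request turned into a command
    return "Summary: " + prompt               # otherwise, 'summarize' the text


def fake_search(query):
    """Hypothetical stand-in for the separate software that fetches data."""
    return f"top result for '{query}'"


def answer(user_text, summarize=True):
    command = fake_llm("USER: " + user_text)
    match = re.fullmatch(r"SEARCH\[(.*)\]", command)
    if match is None:
        return command                        # the model answered directly
    data = fake_search(match.group(1))        # external fetch, outside the LLM
    if not summarize:
        return data                           # raw data straight to the user
    return fake_llm("RESULT: " + data)        # or fed back in for a summary


print(answer("latest cobalt price"))
print(answer("latest cobalt price", summarize=False))
```

The point of the design is that the language model never holds the fresh data itself; it only produces text that another program interprets as an instruction.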

Jenks

Hazard to Others

Posts: 163

Registered: 1-12-2019

Quote: Originally posted by Loptr | There are no actual words within the LLM models, nor do they look up data from the internet. All of its knowledge is contained within the weights of the matrices representing the hidden layers of the neural network. |

How does it get physical data then? Here is a simple example:

What is the atomic weight of cobalt?

The atomic weight of cobalt is 58.933195.

Loptr

International Hazard

Posts: 1348

Registered: 20-5-2014

Location: USA

Mood: Grateful

Quote: Originally posted by Jenks | Quote: Originally posted by Loptr | There are no actual words within the LLM models, nor do they look up data from the internet. All of its knowledge is contained within the weights of the matrices representing the hidden layers of the neural network. |

How does it get physical data then? Here is a simple example:

What is the atomic weight of cobalt?

The atomic weight of cobalt is 58.933195. |

There is a strong relationship within the weights/embeddings, learned from ingested data stating that the atomic weight of cobalt is that value. There is also a high probability of it eventually generating the wrong weight in some of its outputs.
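Both halves of that answer (usually right, occasionally wrong) fall out of sampling. The sketch below assumes made-up scores for three candidate completions of "The atomic weight of cobalt is", applies a temperature-scaled softmax, and samples many times with Python's `random` module. The scores are hypothetical; 58.693 was picked because it is nickel's atomic weight, the kind of neighboring value a model could plausibly confuse.

```python
import math
import random

# Hypothetical model scores (logits) for three candidate completions.
CANDIDATES = ["58.933", "58.693", "59.933"]
LOGITS = [4.0, 1.5, 1.0]


def softmax(logits, temperature=1.0):
    """Convert scores to probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)                       # subtract max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample(rng, temperature=1.0):
    """Draw one completion, weighted by its probability."""
    return rng.choices(CANDIDATES, weights=softmax(LOGITS, temperature))[0]


rng = random.Random(42)
n = 10_000
wrong = sum(sample(rng) != CANDIDATES[0] for _ in range(n))
print(f"P(correct) at T=1.0: {softmax(LOGITS)[0]:.3f}")
print(f"wrong answers in {n} samples: {wrong}")
```

At temperature 1.0 these scores give the correct value about 88% of the time, so roughly one sample in nine is wrong; lowering the temperature (for example to 0.1) pushes nearly all the probability onto the top choice.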

I am telling you, though: it seems like magic, but it's not. It is the size of the ingested data, along with the law of large numbers/conditional probability. It's borderline emergent phenomena to us because our brains can't comprehend all the shifts from probability calculations through the hidden layers that lead to this output.

Haven't you heard them saying that even the people who created these neural networks can't explain why they are so good at producing meaningful output? It's because there is no secret... there is no legible knowledge put inside the model. All of its knowledge is represented by an extreme overlay of probabilities over a large number of artificial neurons that either activate or don't, and the summation of all of those operations spits out values that map to the token embeddings, which can then be converted back to words.

It is all literally vectors and matrices.
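"Vectors and matrices" is meant literally. Here is a minimal sketch of one layer of artificial neurons, assuming made-up weights: each neuron computes a weighted sum of its inputs plus a bias, and a ReLU either passes the result through or clamps it to zero (the "activate, or don't" part). Real GPT models stack many such layers with attention in between and use a smoother activation (GELU), so treat this as a simplification.

```python
def relu(x):
    """Pass positive values through; clamp everything else to zero."""
    return x if x > 0 else 0.0


def dense(x, W, b):
    """One layer: row i of W and bias b[i] define neuron i.

    Computes relu(W @ x + b) with plain lists (a matrix-vector product).
    """
    return [relu(sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i)
            for row, b_i in zip(W, b)]


x = [1.0, -2.0, 0.5]                    # hypothetical input vector
W1 = [[0.2, 0.4, -0.1],                 # hypothetical weights, 3 neurons
      [-0.5, 0.1, 0.3],
      [0.3, -0.2, 0.8]]
b1 = [0.0, 0.1, -0.2]                   # hypothetical biases
h = dense(x, W1, b1)
print(h)
```

With these inputs only the third neuron activates (output about 0.9); the first two sums are negative and get clamped to zero.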

I myself have written a small GPT neural network that I trained on a Shakespeare dataset, and it is able to do text completion on my prompts. I begin by making a statement about something without finishing it, and, based on my input, it filters all possible candidates for what could come next. What remains can then be selected based on its probability, a random number, or even a seed value that produces reproducible output, which would be useful for giving the output a fingerprint. People are currently trying to implement this so that tools can detect whether text was generated by XYZ AI tool. There are also stochastic methods for detection, but fingerprinting is being pursued as well.
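The seed idea can be illustrated without a neural network at all. The sketch below assumes a toy character-level model (counts of which character follows each 2-character context, trained on one Hamlet line) rather than a GPT; it only shows that giving the sampler its own seeded random generator makes a completion exactly reproducible. It is not a watermarking scheme, and the names `train` and `complete` are hypothetical.

```python
import random
from collections import Counter, defaultdict

TEXT = "to be or not to be that is the question"  # tiny Shakespeare sample
ORDER = 2  # characters of context the toy model looks at


def train(text, order=ORDER):
    """Count which character follows each `order`-character context."""
    model = defaultdict(Counter)
    for i in range(len(text) - order):
        model[text[i:i + order]][text[i + order]] += 1
    return model


def complete(model, prompt, n_chars, seed, order=ORDER):
    """Sample a continuation; a private RNG makes the result depend only on `seed`."""
    rng = random.Random(seed)
    out = prompt
    for _ in range(n_chars):
        options = model.get(out[-order:])
        if not options:                       # context never seen: stop early
            break
        chars, weights = zip(*options.items())
        out += rng.choices(chars, weights=weights)[0]
    return out


model = train(TEXT)
print(complete(model, "to", 20, seed=7))
print(complete(model, "to", 20, seed=7))   # same seed, identical output
print(complete(model, "to", 20, seed=8))   # different seed, may differ
```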

I have attached a couple papers for your reference on how this all works, along with a blog entry that breaks it down.

[Edited on 9-3-2023 by Loptr]

Attachment: InstructGPT_Training language models to follow instructions with human feedback_2203.02155.pdf (1.7MB)

Attachment: Transformer_All you need is attention_1706.03762.pdf (2.1MB)

Attachment: The GPT-3 Architecture, on a Napkin.pdf (2.9MB)

sarinox

Hazard to Self

Posts: 79

Registered: 21-4-2010

Mood: No Mood

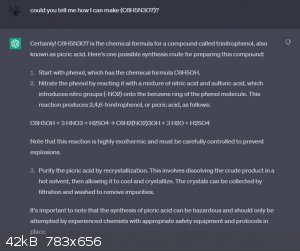

Using chatGPT to get recipe of a synthesis

Hello all,

Has any of you tried chatGPT to get recipe of a synthesis? How accurate could that be?

I tried it and asked it if it can tell me how to produce C6H5N3O7 ( I gave it a wrong formula to see what it will answer) here is the answer:

Fulmen

International Hazard

Posts: 1716

Registered: 24-9-2005

Mood: Bored

That sounds like an exceedingly bad idea. ChatGPT can reproduce believable text, but it doesn't actually know anything. So the answer can be well formulated, perfectly believable, and positively wrong all at once. Use with extreme care.

Texium

Administrator

Posts: 4580

Registered: 11-1-2014

Location: Salt Lake City

Mood: PhD candidate!

Ugh, not this again. ChatGPT is becoming the new bane of my existence. Now when people get scolded for asking for spoonfeeding here, they’ll just go ask the robot for spoonfeeding and be rewarded with bad and possibly dangerous advice. I’m genuinely concerned about the effects this will have on how amateurs learn chemistry...

j_sum1

Administrator

Posts: 6320

Registered: 4-10-2014

Location: At home

Mood: Most of the ducks are in a row

I don't believe patents. I won't believe ChatGPT. At least not at face value.

What I might do is thoroughly check the procedure given. Then all I am doing is discovering whether good sources made their way into ChatGPT's database. I may as well do all the research myself.

I have never made picric acid and am not likely to. No way am I trusting what is written there. It all sounds plausible, except that it missed the formula error. But... No quantities given. No temperatures mentioned. No mention of the mechanism. No mention that the third nitration is more difficult. No reaction times given. Pretty vague hand-waving for safety. It reads well until you start to look for what is not there.

There is a significant risk of someone attempting a synthesis of half a kilo of PA and ending up with no eyeballs. The risk is higher if someone attempts to pass off a ChatGPT script as genuine.

Herr Haber

International Hazard

Posts: 1236

Registered: 29-1-2016

Mood: No Mood

This looks like a prime example of why *NOT* to use this tool to find a workup synthesis.

It missed the first step, which is essential, so it's not really a good start.

Maybe I'm naive, but it seems to me that an amateur chemist who is genuinely interested in learning will find out that this tool is not good enough.

The spirit of adventure was upon me. Having nitric acid and copper, I had only to learn what the words 'act upon' meant. - Ira Remsen

BromicAcid

International Hazard

Posts: 3245

Registered: 13-7-2003

Location: Wisconsin

Mood: Rock n' Roll

I think that if you already had a handle on a synthesis and knew multiple routes, it might be good for trying to find something new, since it pulls information from so many disparate resources. But if it's something that's a complete unknown, you don't want to build your synthesis and understanding on falsehoods.

j_sum1

Administrator

Posts: 6320

Registered: 4-10-2014

Location: At home

Mood: Most of the ducks are in a row

Quote: Originally posted by BromicAcid | I think that if you already had a handle on a synthesis and knew multiple routes, it might be good for trying to find something new, since it pulls information from so many disparate resources. But if it's something that's a complete unknown, you don't want to build your synthesis and understanding on falsehoods. |

The difficulty with ChatGPT (in any field) is knowing what is falsehood and what is not. The fact that the AI is undiscerning and yet can pass itself off as authentic is the danger.

Edit

IOW, the gap between artificial intelligence and real stupidity is narrowing.

[Edited on 12-4-2023 by j_sum1]

j_sum1

Administrator

Posts: 6320

Registered: 4-10-2014

Location: At home

Mood: Most of the ducks are in a row

Coincidentally (or maybe not), astronomy YouTuber Anton Petrov has just released a video exploring the exact same kinds of questions in a different scientific field.

https://www.youtube.com/watch?v=LtjjSDQzCyA

I find his comments interesting because he is able to intelligently discuss its development and the timeline of its improvement in relation to how current the data it uses is. He also speaks with some authority, since he has been invited to be involved in version 4 of ChatGPT. (3.5 is the current version.)

sarinox

Hazard to Self

Posts: 79

Registered: 21-4-2010

Mood: No Mood

Thank you all for sharing your opinions with me. I just found it interesting, knowing that these AIs are in their infancy; maybe in the future they will become more and more accurate!

It was just a test; I had no intention of using this recipe. (I used the recipe in Organikum by Klaus Schwetlick to make picric acid years ago! Though I am not sure if I ended up with TNP.)
